Why might you want to chunk files?

The main reason I upload files in chunks is that I want to upload very large files: pictures, videos, whatever. That means I want to see the status of the upload as it progresses, and if I can’t finish the upload now, I want to be able to pause, go to my favourite coffee shop, and continue from there.

Let’s build a simple app using no additional JavaScript libraries!

Charisse Kenion

To upload a file in chunks, we need to make the browser (the client) aware of the progress of the upload, so most of the chunking logic will live on the client side. Let’s start with a simple React component that has no logic yet, just a form that allows us to select multiple files.

Let’s create a class that will keep track of all the details about the file and its progress; we will also put all the methods we need inside.

What’s inside? chunkSize is the size of each chunk you send to the server. uploadUrl is the path to your server-side endpoint (more on that later). file is the file you have selected to upload. Now for where the magic happens: currentChunkStartByte tracks the starting offset of the current chunk, and currentChunkFinalByte tracks where the current chunk ends (the offset just past its last byte, since it is passed to file.slice as the end argument).

Inside the constructor we instantiate the browser’s built-in XMLHttpRequest class and set the MIME type to application/octet-stream, which simply denotes generic binary data. currentChunkFinalByte is set to either chunkSize or the file size, whichever is smaller, so that files smaller than one chunk are sent in a single request.
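As a rough illustration of the bookkeeping described above, the sketch below computes chunk boundaries the same way: the first chunk ends at the smaller of the chunk size and the file size, and each later chunk starts where the previous one ended. The names ChunkBounds, firstChunk, nextChunk, and allChunks are hypothetical helpers made up for this example, not part of the FileToUpload class, and the end offset is assumed to be exclusive, as in Blob.slice.

```typescript
// Hypothetical helpers for illustration only; not part of FileToUpload.
interface ChunkBounds {
  start: number; // offset of the first byte in the chunk
  end: number;   // offset one past the last byte (exclusive, as in Blob.slice)
}

// First chunk: ends at chunkSize or fileSize, whichever is smaller.
function firstChunk(fileSize: number, chunkSize: number): ChunkBounds {
  if (chunkSize <= 0) {
    throw new Error("chunkSize must be positive");
  }
  return { start: 0, end: Math.min(chunkSize, fileSize) };
}

// Next chunk, or null when the previous chunk already reached the end of the file.
function nextChunk(prev: ChunkBounds, fileSize: number, chunkSize: number): ChunkBounds | null {
  if (prev.end >= fileSize) {
    return null;
  }
  return { start: prev.end, end: Math.min(prev.end + chunkSize, fileSize) };
}

// Collect every chunk boundary for a file of the given size.
function allChunks(fileSize: number, chunkSize: number): ChunkBounds[] {
  const chunks: ChunkBounds[] = [];
  let current: ChunkBounds | null = firstChunk(fileSize, chunkSize);
  while (current !== null) {
    chunks.push(current);
    current = nextChunk(current, fileSize, chunkSize);
  }
  return chunks;
}
```

For a 2,500-byte file and 1,000-byte chunks, allChunks(2500, 1000) gives three ranges: 0 to 1000, 1000 to 2000, and 2000 to 2500. A 500-byte file with the same chunk size gives a single range, 0 to 500.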

Let’s add the method that will do all the uploading.

    uploadFile() {
        this.request.open('POST', FileToUpload.uploadUrl, true);

        // split the file according to the boundaries; the end offset passed to slice() is exclusive
        const chunk: Blob = this.file.slice(this.currentChunkStartByte, this.currentChunkFinalByte);

        // tell the server which bytes this request carries and how large the whole file is
        this.request.setRequestHeader('Content-Range', `bytes ${this.currentChunkStartByte}-${this.currentChunkFinalByte}/${this.file.size}`);

        this.request.onload = () => {
            const remainingBytes = this.file.size - this.currentChunkFinalByte;

            if (this.currentChunkFinalByte === this.file.size) {
                alert('Yay, upload completed! Chao!');
                return;
            } else if (remainingBytes < FileToUpload.chunkSize) {
                // if the remaining chunk is smaller than the chunk size we defined
                this.currentChunkStartByte = this.currentChunkFinalByte;
                this.currentChunkFinalByte = this.currentChunkStartByte + remainingBytes;
            } else {
                // keep chunking
                this.currentChunkStartByte = this.currentChunkFinalByte;
                this.currentChunkFinalByte = this.currentChunkStartByte + FileToUpload.chunkSize;
            }

            this.uploadFile();
        }

        const formData = new FormData();
        formData.append('file', chunk, this.file.name);
        this.request.send(formData); // send it now!
    }

Putting these together, your FileToUpload.ts will look like this.

Let’s use what we created inside our component

Now, we have a class to represent the file to be uploaded. We can instantiate this class as many times as needed inside the component. The resulting component looks like this.

Our client-side code is now sending chunks. Let’s prepare the backend using Go and Gin.

Sven Brandsma

Starting with the typical Gin setup, we add one endpoint, /photo.

Then we handle the request:

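    func uploadFile(c *gin.Context) {
        file, header, e := c.Request.FormFile("file")
        if e != nil {
            panic("Error reading the chunk from the request: " + e.Error())
        }
        defer file.Close()

        // Open the target file in append mode, creating it when the first chunk arrives.
        // The permission bits (0644) let later requests reopen the file on Unix-like systems.
        f, e := os.OpenFile(header.Filename, os.O_APPEND|os.O_CREATE|os.O_RDWR, os.ModeAppend|0644)
        if e != nil {
            panic("Error creating file on the filesystem: " + e.Error())
        }
        // Close the file on every path; after the explicit Close below succeeds,
        // this second Close only returns an error, which is ignored.
        defer f.Close()

        if _, e := io.Copy(f, file); e != nil {
            panic("Error during chunk write: " + e.Error())
        }

        if isFileUploadCompleted(c) {
            uploadToGoogle(c, f)
            if e = f.Close(); e != nil {
                panic("Error closing the file, I/O problem?")
            }
            if e = os.Remove(header.Filename); e != nil {
                panic(fmt.Sprintf("Could not delete file %v", header.Filename))
            }
        }
    }

The logic here is fairly straightforward: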

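- Open the file, or create it if it does not exist. os.O_APPEND tells the OS that every write appends to the file, so there is no need to seek to the end first. os.O_RDWR opens the file for both reading and writing. os.ModeAppend marks the file as append-only in the mode argument; note that it is a mode flag rather than a permission, so on Unix-like systems you will want to combine it with permission bits such as 0644.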

- Then, we write/append the chunk to the file.

- We check if the file upload is completed. We do this by checking the range of bytes in the headers.

- Finally, we upload the file to Google Cloud.

To check that the file is finished uploading, we parse the Content-Range header we sent from the browser.

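    func isFileUploadCompleted(c *gin.Context) bool {
        // Expected format: "bytes <start>-<end>/<total size>"
        contentRangeHeader := c.Request.Header.Get("Content-Range")
        rangeAndSize := strings.Split(contentRangeHeader, "/")
        if len(rangeAndSize) != 2 {
            panic("Malformed Content-Range header")
        }
        rangeParts := strings.Split(rangeAndSize[0], "-")
        if len(rangeParts) != 2 {
            panic("Malformed byte range in Content-Range header")
        }
        rangeMax, e := strconv.Atoi(rangeParts[1])
        if e != nil {
            panic("Could not parse range max from header")
        }
        fileSize, e := strconv.Atoi(rangeAndSize[1])
        if e != nil {
            panic("Could not parse file size from header")
        }
        // The client sends the end offset as one past the last byte,
        // so the upload is complete when it equals the file size.
        return fileSize == rangeMax
    }

The upload to the cloud is GCP-specific, but I will summarize it here in case anybody needs it: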

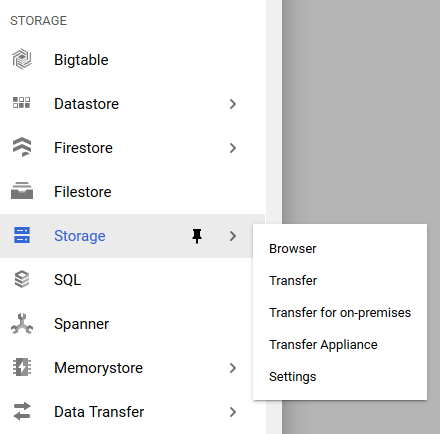

- Create a bucket under Storage in Google Cloud Console. I used Uniform Access Control, but if you need finer access control you can choose the other option.

- Create a service account to allow access to your resource. This lets you generate a JSON file that stores your credentials. Put this file somewhere on your filesystem and create an environment variable, GOOGLE_APPLICATION_CREDENTIALS, that points to it. Google provides some instructions here. I provide my own simple instructions for Windows here.

- Use code like this to upload your file.

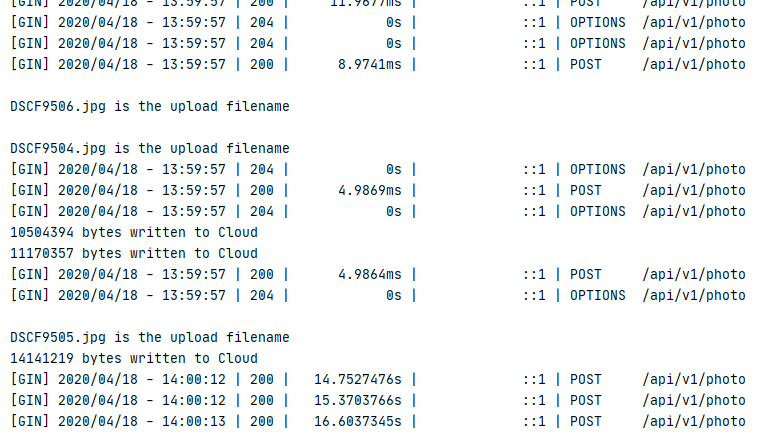

    func uploadToGoogle(c *gin.Context, f *os.File) {
        creds, isFound := os.LookupEnv("GOOGLE_APPLICATION_CREDENTIALS")
        if !isFound {
            panic("GCP environment variable is not set")
        } else if creds == "" {
            panic("GCP environment variable is empty")
        }

        const bucketName = "el-my-gallery"
        client, e := storage.NewClient(c)
        if e != nil {
            panic("Error creating client: " + e.Error())
        }
        defer client.Close()

        bucket := client.Bucket(bucketName)
        w := bucket.Object(f.Name()).NewWriter(c)
        defer w.Close()

        fmt.Println()
        fmt.Printf("%v is the upload filename", f.Name())
        fmt.Println()

        // rewind to the start of the file before copying it to the bucket
        f.Seek(0, io.SeekStart)
        if bw, e := io.Copy(w, f); e != nil {
            panic("Error during GCP upload:" + e.Error())
        } else {
            fmt.Printf("%v bytes written to Cloud", bw)
            fmt.Println()
        }
    }

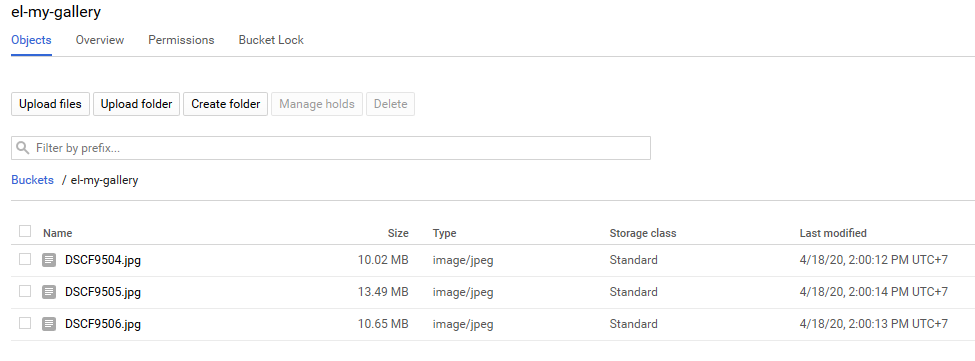

A few things to mention here. bucketName is the name of the bucket you created in step 1. If you forget to close your writer, the upload will still run successfully, but the file will not show up in Google Storage. f.Seek(0, io.SeekStart) is needed to rewind the reader to the beginning, because we just finished writing to the end of the file.

Let’s test!

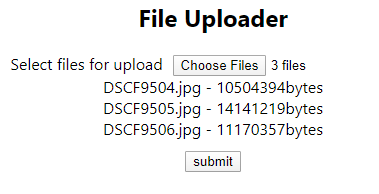

Submit the form with a few files.

Golang console output:

Google Cloud Platform result:

Great, everything worked :)

Viktor Talashuk

Summary

I think this solution worked out quite well, but there are some things to consider. In production, two uploads with the same filename would collide, because the server writes each chunk to a file named after the upload. I recommend adding a unique GUID to the name, or a user ID in multi-tenant solutions.
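One way to apply that recommendation is to build the name before the first chunk is sent and reuse it for every chunk of the same file. The sketch below is only an illustration: uniqueUploadName is a hypothetical helper, and it assumes the caller supplies the unique ID (in a modern browser, crypto.randomUUID() is one possible source).

```typescript
// Hypothetical helper: prefix the original file name with a unique ID
// (and optionally a user ID) so two uploads of "photo.jpg" do not collide.
function uniqueUploadName(originalName: string, uniqueId: string, userId?: string): string {
  // Keep only the last path segment so the name stays a plain file name.
  const baseName = originalName.split(/[\\/]/).pop() || "upload";
  const parts = userId ? [userId, uniqueId, baseName] : [uniqueId, baseName];
  return parts.join("-");
}
```

The same name has to be sent with every chunk of a given file (for example as the third argument to formData.append), otherwise the server would append the chunks to different files.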

Initially I tried to build this app using a more efficient approach, *without saving the file to the filesystem*, appending each chunk to Google Cloud directly as it came in. Unfortunately, I ran into a GCP limitation: at the time of writing, a single object can be updated at most once per second, and updates above this rate fail. Therefore, I had to fall back to using the filesystem to put the chunks together.